Supporting large-scale data transfers on the Janet Network

Explore the technical challenges and best practices involved in moving large volumes of data between sites on Janet and beyond.

Introduction

Jisc members and customers increasingly need to transfer large volumes of data over the network, perhaps to move science or research data from the point of capture to a compute facility, to visualise the data, to back it up, preserve it and make it rediscoverable, or to support collaborative research in some way.

The Janet Network provides connectivity to a wide range of research and education organisations across the UK. While Jisc can provide connectivity at various scales depending on need and budget, there are many factors to consider, and best practices to be aware of, for you to make optimal use of that connection.

Jisc already connects some of our more data-intensive science organisations to the Janet backbone at 100Gbit/s, and many others have multiple tens of Gbit/s of capacity. But whatever the size of the connection, there are good principles to be aware of to make the most of its capacity to move data.

This guide is aimed at teams responsible for building network research infrastructure on campuses but could be useful for researchers or other campus staff. We talk about science and research data and use those terms interchangeably.

Expectations

It’s useful for researchers to have realistic expectations for moving data over the network. What data transfer rates are required to move a given amount of data in a given period?

Some theoretical examples of throughput can help. Moving 1TB of data in an hour requires roughly 2Gbit/s of throughput, and moving 100TB in a day needs the best part of 10Gbit/s. Note that networking specialists tend to think in bits per second while researchers tend to think in bytes per second, where a byte is 8 bits. So 1TB is 8,000Gbit, and figures quoted in the two units differ by a factor of eight.
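The arithmetic behind these figures is simple enough to script when discussing requirements with researchers. The sketch below assumes decimal units (1TB = 10^12 bytes) and ignores protocol overheads and competing traffic, so it gives the minimum sustained rate, not a guaranteed one.

```python
def required_gbits_per_second(terabytes, hours):
    """Sustained throughput (Gbit/s) needed to move `terabytes` in `hours`.

    Assumes decimal units (1TB = 10**12 bytes) and 8 bits per byte,
    and ignores protocol overheads and other traffic on the links.
    """
    bits = terabytes * 10**12 * 8
    seconds = hours * 3600
    return bits / seconds / 10**9


print(f"{required_gbits_per_second(1, 1):.2f}")     # 2.22
print(f"{required_gbits_per_second(100, 24):.2f}")  # 9.26
```

If a researcher quotes sizes in binary units (TiB), the required rate is about 10% higher.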

These are theoretical figures - in practice, other traffic will likely share the same network links, and limitations in network elements (such as campus firewalls), software tools (plain old File Transfer Protocol, FTP, is not the best choice) or end systems (perhaps with poor disk I/O performance) can all reduce the overall throughput achieved.

Some researchers may have a bad first experience trying to copy data to a remote site and be put off trying again, when the cause may be addressed through consultation with their local IT support. It’s important that researchers don’t unnecessarily turn to shipping data by physical media when the Janet Network is available and has the potential to significantly improve the efficiency of their workflows.

This guidance explains how to optimise performance across a range of constraints.

Best practice

As the need to move ever larger volumes of data has grown, best practice has evolved over time. The CERN Large Hadron Collider (LHC) experiments have been moving large data volumes for many years. They routinely move many petabytes of data around the world to support distributed computation on what’s known as the Worldwide Large Hadron Collider Computing Grid (WLCG).

Research infrastructures that participate in the CERN LHC experiments are generally well set up to optimise data flows. The WLCG has dedicated fibre optic links for traffic between its main sites (LHCOPN) and an overlay network for many others (LHCONE), and its many years of experience have allowed the knowledge needed for efficient data transfers to be developed and shared.

That knowledge is applicable to all research and education networks - it is important that other science and research communities are made aware of and adopt such practices.

The Science DMZ

These good practices have been written up by ESnet, the US Energy Sciences network, in a model they refer to as the Science DMZ. This name has caught on, even though the principles can be used for moving any data over a network (not just science data) and the DMZ, or 'demilitarised zone', of the local site network is used to optimise performance rather than for its usual security function.

A number of Janet-connected sites have already adopted the principles described in the Science DMZ model, and many, including the UK GridPP sites participating in the CERN experiments, did so by learning from experience before ESnet put the model to paper.

The basic premise of Science DMZ is that a site’s science or research traffic should be handled differently from the day-to-day business traffic. A one-size-fits-all approach is unlikely to give the performance that large-scale data transfers need while supporting the necessary security measures that typical email or web usage requires.

The aim is to provide friction-free networking for science traffic, using highly capable network devices to minimise, and ideally eliminate, packet loss, which in turn maximises the throughput of data transfer tools that use the TCP transport protocol.

The TCP protocol, used for reliable delivery of data over the Internet, retransmits data if packets are dropped in transit and slows down its sending rate on the assumption that the drops were caused by congestion. Just a fraction of 1% packet loss can significantly reduce throughput for the TCP congestion control algorithms in common use today, particularly when transfers run over long distances with high packet round trip times (RTTs).
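To see why loss and RTT interact so badly, a widely cited approximation is the Mathis et al. model for Reno-style TCP, which bounds steady-state throughput at roughly (MSS / RTT) × (C / √p), where p is the packet loss rate and C ≈ 1.22. The sketch below evaluates it; the 1460-byte MSS (typical for a 1500-byte MTU) and 0.01% loss are illustrative assumptions, and modern algorithms such as CUBIC respond to loss differently in detail, so treat the numbers as indicative only.

```python
import math


def mathis_throughput_bps(mss_bytes, rtt_seconds, loss_rate):
    """Approximate upper bound on steady-state TCP throughput in bit/s.

    Mathis et al. model: rate <= (MSS / RTT) * (C / sqrt(p)), C = sqrt(3/2).
    It describes Reno-style loss-based congestion control; it is an
    estimate, not a prediction for any particular TCP implementation.
    """
    c = math.sqrt(3 / 2)
    return (mss_bytes * 8 / rtt_seconds) * (c / math.sqrt(loss_rate))


# 0.01% packet loss (p = 1e-4) with a 1460-byte MSS, at increasing RTTs:
for rtt_ms in (1, 10, 100):
    rate = mathis_throughput_bps(1460, rtt_ms / 1000, 1e-4)
    print(f"RTT {rtt_ms:>3} ms: ~{rate / 1e6:,.0f} Mbit/s")
```

With the same loss rate, the achievable rate falls in proportion to RTT: around 1.4Gbit/s at 1 ms but only around 14Mbit/s at 100 ms, which is why a loss-free path matters most for distant peers.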

There are four main principles to the Science DMZ model:

- A network architecture explicitly designed for high-performance applications, where the science/research network is distinct from the general-purpose network

- The use of dedicated, well-tuned systems for data transfer, ie data transfer nodes (DTNs), with performant disk input/output and data transfer software

- Network performance measurement and testing systems that are used to persistently characterise the network over time and that are available for troubleshooting; perfSONAR is cited in the Science DMZ guidance and is commonly used for this purpose including extensively on the WLCG

- Security policies and enforcement mechanisms that are tailored for high performance science environments

Achieving optimal end-to-end performance is not just about the capacity of the network links - every element in the end-to-end chain must be considered, by both sites involved in the data transfer.

Read more about the Science DMZ model, including tips on end-to-end and network performance. There is also the original paper describing the Science DMZ principles (pdf) which continues to be good reading today.

Local network architecture

A traditional campus network typically has all its internal systems behind one or a pair of resilient stateful firewalls which support intrusion detection, deep packet inspection, and similar advanced security functions that are important for general web, mail, and Internet traffic.

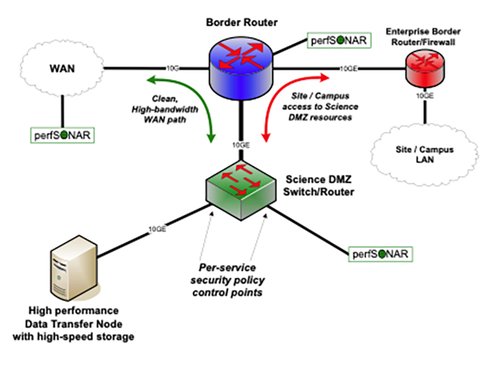

The Science DMZ architecture sees the science traffic instead routed to a separate physical or logical 'on ramp' to the campus network, such that data transfers to or from the campus route directly at the campus border router to the DTN(s), and do not pass through the main campus firewall, as illustrated by the figure below.

Text description of the example Science DMZ network architecture graphic

This diagram shows a typical Science DMZ architecture. The site border router provides connectivity to the Internet, routes general campus traffic through the campus firewall to the internal campus network, but also has an interface that connects directly to the Science DMZ, in which the data transfer node(s) are hosted, ideally along with a perfSONAR measurement server. A switch-router device in the Science DMZ can apply per-service security filtering via access control lists (ACLs).

The figure presents the conceptual architecture of the model, where data to be transferred to remote peers is sent to/from the DTN(s). The architecture is designed to minimise and ideally eliminate potential causes of packet loss that can seriously degrade TCP performance.

When incoming data is received by a DTN, it may then need to be moved on to an internal research file store. Here there is a balance to be struck. A local transfer through the campus firewall may give reasonable performance, because the round trip time (RTT) from the Science DMZ to the internal network is very low, but it is common for Science DMZ sites to mount the internal file store directly on the DTNs. In such cases, the link used for the mount (for example NFS, GPFS or another protocol) can be secured on the file store server(s), or by network filtering that lets only those mount protocols pass, so that it does not become a back door for remote access via tools such as ssh.

Data transfer nodes (DTNs) and software tools

The DTNs should be well-tuned devices with good hardware I/O and appropriately set buffer memory for transfers. TCP buffer sizing is particularly important for transfers to more distant servers. ESnet’s FasterData site is a useful source of advice on hardware selection.
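Buffer sizing comes down to the bandwidth-delay product (BDP): to keep a path full, TCP needs roughly bandwidth × RTT worth of data in flight, so the send and receive buffers on both DTNs must be at least that large. A minimal sketch of the arithmetic, using an illustrative 10Gbit/s path and 100 ms round trip:

```python
def bdp_bytes(bits_per_second, rtt_ms):
    """Bandwidth-delay product: bytes that must be in flight to fill the path.

    Integer arithmetic avoids floating-point rounding; RTT is in milliseconds.
    """
    return bits_per_second * rtt_ms // (8 * 1000)


# Illustrative 10Gbit/s path with a 100 ms round trip time:
print(bdp_bytes(10 * 10**9, 100))  # 125000000 bytes, i.e. 125MB
```

The same link at 1 ms RTT needs only about 1.25MB, which is why tuning matters most for distant servers. On Linux the relevant limits are set through sysctls such as `net.ipv4.tcp_rmem` and `net.ipv4.tcp_wmem`; see FasterData for recommended values rather than relying on these illustrative figures.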

While reasonable performance can be achieved in some cases with simple software tools such as the Unix rsync tool, it is likely that higher throughput will require the use of more advanced software such as Globus. The WLCG has recently moved towards using XRootD and WebDAV.

Appropriate security implementation

It is important to note that a Science DMZ is not a firewall bypass, rather the point is to use performant technologies to apply security policies for science traffic such that the data transfers required for the research workflows to be conducted effectively are not impaired by performance issues.

To achieve this, the systems within the Science DMZ should be configured to only run the minimal tools, software and protocols required, and the implementation of policy should use performant methods. In practice, this means the goal is typically to have a Science DMZ that can be reasonably defended by a stateless firewall or a set of ACLs, generally without the in-line deep packet / intrusion inspection deployed for business (email, web browsing) traffic.
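To make "stateless" concrete: each packet is checked on its own against an ordered list of match rules, with no connection tracking or payload inspection. The sketch below models such an ACL in Python. All addresses are from documentation ranges, and the permitted ports (443 and a 50000-51000 data range on the DTN, plus NFS on port 2049 from the DTN to an internal file store, echoing the mount-filtering point earlier) are hypothetical examples, not a recommended policy for any particular tool.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    action: str                  # "permit" or "deny"
    protocol: str                # e.g. "tcp"
    src: ipaddress.IPv4Network
    dst: ipaddress.IPv4Network
    dst_ports: range             # destination ports matched by this rule


def make_rule(action, protocol, src, dst, first_port, last_port):
    """Build a rule matching an inclusive destination port range."""
    return Rule(action, protocol, ipaddress.ip_network(src),
                ipaddress.ip_network(dst), range(first_port, last_port + 1))


# Hypothetical policy using documentation address ranges:
#   192.0.2.10      the DTN in the Science DMZ
#   198.51.100.20   an internal research file store
#   203.0.113.0/24  a remote collaborator's network
SCIENCE_DMZ_ACL = [
    make_rule("permit", "tcp", "203.0.113.0/24", "192.0.2.10/32", 443, 443),
    make_rule("permit", "tcp", "203.0.113.0/24", "192.0.2.10/32", 50000, 51000),
    make_rule("permit", "tcp", "192.0.2.10/32", "198.51.100.20/32", 2049, 2049),
]


def evaluate(acl, protocol, src, dst, dst_port):
    """Return the action of the first matching rule; deny if none match."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    for rule in acl:
        if (rule.protocol == protocol and src_ip in rule.src
                and dst_ip in rule.dst and dst_port in rule.dst_ports):
            return rule.action
    return "deny"  # implicit default deny at the end of the list


print(evaluate(SCIENCE_DMZ_ACL, "tcp", "203.0.113.7", "192.0.2.10", 50123))  # permit
print(evaluate(SCIENCE_DMZ_ACL, "tcp", "203.0.113.7", "192.0.2.10", 22))     # deny
```

Because nothing is remembered between packets, return traffic needs its own rules in the opposite direction. That simplicity is what typically lets switch-routers apply ACLs without slowing high-rate flows.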

Measuring performance

Should a data transfer suffer from poor performance, it is important to be able to troubleshoot the issue effectively. While it is possible to use throughput test tools like iperf after a problem arises, and to run traceroute to check traffic paths and ping for packet loss to a given destination, it is far more useful to have network characteristic data already collected, going back over time, to call on for that diagnosis.

Has there been a steady rise in packet loss, a change in route, or a more sudden drop? How do the measurements to the problematic site compare to measurements over time to other sites? This is why running a persistent monitoring tool is important. The open source perfSONAR tool is widely used for measuring network characteristics over time, with hundreds of perfSONAR nodes in use by the WLCG.
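As a minimal sketch of the kind of question persistent measurements let you answer, the hypothetical function below takes a series of packet-loss percentages to one destination, oldest first (how you export them from your measurement archive is not shown), and distinguishes a gradual rise from a sudden step. The thresholds are illustrative assumptions, not perfSONAR settings.

```python
def classify_loss_change(samples, min_change=0.05, step_share=0.5):
    """Classify how packet loss (percent, oldest sample first) has changed.

    Returns "stable", "sudden" or "gradual". `min_change` and `step_share`
    are hypothetical thresholds chosen for illustration.
    """
    if len(samples) < 2:
        return "stable"
    total = samples[-1] - samples[0]
    if abs(total) < min_change:
        return "stable"
    # Largest change between any two consecutive measurements.
    largest_step = max((b - a for a, b in zip(samples, samples[1:])), key=abs)
    # If a single step accounts for most of the overall change, call it sudden.
    if abs(largest_step) >= step_share * abs(total):
        return "sudden"
    return "gradual"


print(classify_loss_change([0.0, 0.0, 0.0, 1.0, 1.0]))       # sudden
print(classify_loss_change([0.0, 0.2, 0.4, 0.6, 0.8, 1.0]))  # gradual
```

This compares only the first and last samples, so on noisy data you would want to smooth or average first; running the same check against other destinations helps tell a local problem from a remote one.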

Network characteristics are only one part of the story. They may point to a specific network issue, but it is also important to ensure that application logs are kept; tools such as FTS, used by the WLCG, keep logs for a certain period. Host or server-based tools can also be particularly useful for identifying server performance bottlenecks.

Building on Science DMZ

The Science DMZ model is an enabler. In addition to helping large data transfers run faster, it can also be a cornerstone of providing the best access to a site’s repository where experimental data sits alongside the papers produced from it, as described by the Modern Research Data Portal.

It is important to track new tools and developments that may further enhance best practices. For example, new versions of TCP may emerge that perform better when encountering packet loss, with TCP-BBRv2 being a promising example as reported at TNC22 (pdf). But the general principle of a distinct science enclave for your network will remain beneficial.

If you engage with the researchers on your campus, discuss their requirements and set expectations well, you will have taken the most important first steps towards a positive outcome. Researchers will be much less likely to resort to moving data around unnecessarily on hard disks, and the data movement aspects of their workflows can be optimised.

Undertaking network forward looks can help you understand your future Janet connectivity requirements. Such exercises should draw on the data movement requirements you collect from your researchers and allow you to have timely discussions with Jisc about capacity when needed.

How we can help you

Jisc can provide assistance in a variety of ways to sites seeking to optimise large-scale data transfers. We offer general advice and guidance on Science DMZ, deploying perfSONAR, and general network or end-to-end performance topics and issues. We also provide a network/data transfer performance testing facility, which includes an iperf endpoint, a perfSONAR reference node that you can test against, and a DTN supporting a variety of data transfer tools, including Globus. Members and customers are welcome to use these facilities, as follows:

- iperf: test to endpoint iperf-slough-10g.ja.net

- perfSONAR: the throughput endpoint is ps-slough-10g.ja.net and the latency endpoint is ps-slough-1g.ja.net

- DTN: the specific application endpoint will vary; please contact us for specifics

The current test platforms hosted at our Slough DC support tests up to 10Gbit/s. A facility supporting tests up to 100Gbit/s is planned to go live in London later in 2022.
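For a scripted check against the iperf endpoint, the sketch below wraps the iperf3 client and reads the receiver-side throughput from its JSON report. It assumes that iperf3 is installed locally and that the endpoint accepts iperf3 clients (this guide says only "iperf", and the older iperf2 uses a different command set and output); the duration and stream count are illustrative.

```python
import json
import subprocess


def run_iperf3(host, seconds=10, streams=1):
    """Run an iperf3 TCP client test to `host`; return the parsed JSON report."""
    result = subprocess.run(
        ["iperf3", "-c", host, "-t", str(seconds), "-P", str(streams), "-J"],
        capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


def received_gbits(report):
    """Throughput measured at the receiving end of the test, in Gbit/s."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e9
```

For example, `received_gbits(run_iperf3("iperf-slough-10g.ja.net", streams=4))` returns a single figure you can log over time. Running the same test from your DTN and from a host behind the campus firewall gives the kind of relative comparison described below.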

We recommend that any Janet-connected site deploy a perfSONAR node to ensure persistent measurements of network characteristics are collected over time. The best places to host such a device are alongside the storage device(s) that data are regularly copied to or from, and/or at the campus edge, ideally on your campus edge router.

This configuration enables relative comparisons of network measurements to/from your storage nodes and to/from your campus edge. Jisc can provide assistance in deploying perfSONAR, or potentially loan a perfSONAR small node that can run throughput tests of up to 1Gbit/s. Jisc can also host perfSONAR measurement meshes for communities, allowing deployed nodes to have a minimal configuration with the measurement data held centrally.

Further information

- If you have general queries about the capacity of your connection to Janet, you should contact your relationship manager, or email help@jisc.ac.uk. Your site should be able to view its traffic utilisation to Janet through Netsight.

- There is an end-to-end performance email list, e2epi@jiscmail.ac.uk, where Jisc staff or community members may answer questions. You can join the E2EPI mailing list via JiscMail.

- Download the Janet end-to-end performance initiative case study (pdf).